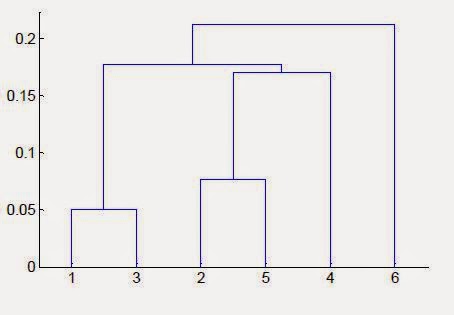

- Produces a set of nested clusters

- organized as a hierarchical tree

- Can be visualized as a dendrogram

- a tree like diagram that records the split

2. Strengths of Hierarchical Clustering

- Do not have to assume any particular number of clusters

- any desired number of clusters can be obtained by "cutting" the dendogram at the proper level

- They may correspond to meaning taxonomies

- example in biological sciences (e.g., animal kingdom, phylogeny reconstruction, ...)

3. Two main types of Hierarchical Clustering

- Agglomerative

- Start with the points as individual clusters

- At each step, merge the closest pair of clusters until only one cluster (or k clusters) left.

- Divisive

- Start with one, all-inclusive cluster

- At each step, split a cluster until each cluster contains a point (or there are k clusters)

4. Inter-Cluster Similarity

- Min

- strength: can handle non-elliptical shapes

- weakness: sensitive to noise and outliers

- Max

- strength: less susceptible to noise and outliers

- weakness: tend to break large clusters; biased towards globular clusters

- Group Average

- proximity of two clusters is the average of pairwise similarity between points in the two clsuters

$Proximity = \frac{\sum_{p_i \in Cluster_i, P_j \in cluster_j}{Proximity(p_i, p_j)}}{|Cluster_i||Cluster_j|}$

- Strongness: less susceptible to noise and outliers

- Weakness: biased towards globular clusters

- Distance between centroids

- Other methods driven by an objective function

- Ward's method users squared error

- Similarity of two clusters is based on the increase in squared error when two clusters are merged

- Similar to gorup average if distance between points is distance squared.

- Strongness: less susceptible to noise and outliers

- Weakness:

- Biased towards globular clusters

- Hierarchical analogue of K-means

5. Hierarchical Clustering: Problems and Limitations

- Once a decision is made to combine two clusters, it cannot be undone

- No objective function is directly minimized

- Different schemes have problems with one or more of the following

- sensitivity to noise and outliers

- difficulty handling different sized clusters and convex shapes

- breaking large clusters

6. MST: Divisive Hierarchical Clustering

No comments:

Post a Comment